You’re going to love this. Availability and Performance features have always been a strong point of vSphere and are what we all fell in love with. VMware has always made sure to keep improving this area and this version is no different.

There are improvements in four major areas:

- High Availability (HA)

- Fault Tolerance (FT)

- Distributed Resource Scheduling (DRS)

- Storage I/O Control (SIOC)

Let’s take a look at the improvements individually.

High Availability (HA)

This is probably the area of most improvement. Some major work has gone into the High Availability side of things: some of it to make things simpler, some of it to provide more features. Here are the changes in turn:

Simplified Admission Control

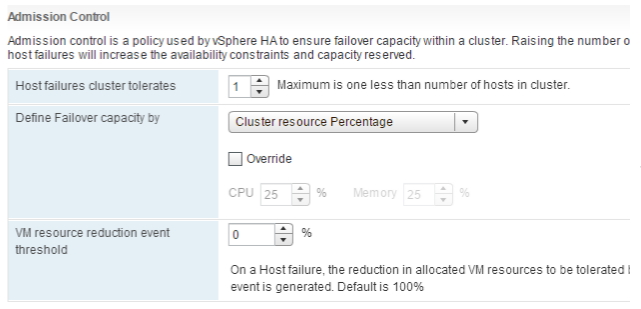

Configuring HA wasn’t very complicated to start with, but accurately calculating how much protection you would end up with was a little difficult. With vSphere 6.5, the idea is that you define how many host failures the cluster should tolerate and the service does the relevant calculations itself. This is based on the “percentage of resources reserved” admission control policy, which is now the default, and override settings can also be defined easily.
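
To get a feel for the calculation that HA now does for you, here is a minimal sketch in Python. It assumes every host in the cluster has identical capacity, which is a simplification; the real service works from actual host resources. The function name and parameters are made up for illustration and are not part of any VMware API.

```python
def reserved_failover_percent(total_hosts: int, host_failures_to_tolerate: int) -> float:
    """Percentage of cluster resources to keep in reserve.

    Assumption: all hosts are the same size, so losing one host
    removes exactly 1/total_hosts of the cluster's capacity.
    """
    if total_hosts <= 0:
        raise ValueError("cluster must contain at least one host")
    if not 0 <= host_failures_to_tolerate < total_hosts:
        raise ValueError("failures to tolerate must be between 0 and total_hosts - 1")
    return 100.0 * host_failures_to_tolerate / total_hosts


# A 4-host cluster that should survive one host failure.
print(reserved_failover_percent(4, 1))
```

With identical hosts, adding a fifth host drops the reserved share to 20% without anyone touching the setting, which is the kind of recalculation that used to be manual.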

Another improvement is in the area of automatic restarts. Previously, HA would restart a VM even if that meant an impact on performance. Now, a warning is issued if HA senses that performance degradation would occur as a result of that restart.

HA Restart Priorities

VM restart priorities determine the order in which the cluster allocates resources to restarting VMs.

At this point, I think it’s best to mention that there are two new restart priority options being introduced with vSphere 6.5: Highest and Lowest. I think it’s a very good idea because sometimes, just three levels felt a bit restrictive.

Another point to note is that lower priority machines might be prevented from restarting by Admission Control.
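
As a rough illustration of how priority and available capacity interact, here is a small Python sketch. It is a toy model, not how HA is actually implemented: the names `RestartPriority`, `VM` and `plan_restarts` are invented for this example, capacity is reduced to a single number, and the three pre-existing levels are assumed to be High, Medium and Low.

```python
from enum import IntEnum
from typing import NamedTuple


class RestartPriority(IntEnum):
    # Highest and Lowest are the two levels new in vSphere 6.5.
    HIGHEST = 1
    HIGH = 2
    MEDIUM = 3
    LOW = 4
    LOWEST = 5


class VM(NamedTuple):
    name: str
    priority: RestartPriority
    demand_ghz: float  # hypothetical single-number resource demand


def plan_restarts(vms, spare_capacity_ghz):
    """Walk VMs from highest to lowest priority and restart what still fits."""
    restarted, blocked = [], []
    remaining = spare_capacity_ghz
    for vm in sorted(vms, key=lambda v: v.priority):
        if vm.demand_ghz <= remaining:
            restarted.append(vm.name)
            remaining -= vm.demand_ghz
        else:
            blocked.append(vm.name)  # not enough capacity left for this VM
    return restarted, blocked


vms = [
    VM("batch", RestartPriority.LOWEST, 4.0),
    VM("db", RestartPriority.HIGHEST, 4.0),
    VM("web", RestartPriority.MEDIUM, 4.0),
]
print(plan_restarts(vms, spare_capacity_ghz=8.0))
```

In this example the lowest priority VM is the one left without capacity, which is the situation the note above warns about.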

HA Orchestrated Restart

One highly awaited feature was for HA to restart virtual machines in a particular order. This is particularly important for multi-tiered applications where there are dependencies on particular services to be available before the next one can come online.

It’s great to see that functionality finally being delivered with this release. Now, VM to VM dependency chains can be defined that dictate the order in which the machines come up, and the process is further strengthened by validation checks. These checks look for circular dependency rules, both within and outside the priority group.

Similar to how vApps work, configuration can be done to either wait for VMware Tools to come up or wait for a particular amount of time, before the next group of machines is started.
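
The ordering and the circular dependency check can be sketched with a topological sort. The Python below uses the standard library `graphlib` module (Python 3.9+) and only illustrates the idea; it is not VMware’s implementation, and the VM names and the `restart_batches` helper are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def restart_batches(depends_on: dict[str, set[str]]) -> list[list[str]]:
    """Group VMs into batches; each batch only depends on earlier batches.

    `depends_on` maps a VM name to the set of VMs that must be up first.
    Raises CycleError if the rules contain a circular dependency.
    """
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # validation step: fails here on a dependency loop
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # VMs whose dependencies are all up
        batches.append(ready)
        sorter.done(*ready)  # in real life: wait for VMware Tools or a timer
    return batches


# Classic three-tier application: database, then app server, then web front end.
print(restart_batches({"db": set(), "app": {"db"}, "web": {"app"}}))

try:
    restart_batches({"a": {"b"}, "b": {"a"}})
except CycleError as err:
    print("rejected circular rule:", err.args[0])
```

Each batch corresponds to a group of machines that can be started together before the wait condition for the next group kicks in.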

Proactive HA

Yet another feature that was much awaited. Sometimes, it’s desirable to proactively move VMs to another host if the current host starts experiencing partial failure. Of course, this feature relies heavily on the monitoring solutions of the hardware vendors involved but nevertheless, in most cases, it could avoid having to cold boot VMs on another host through a triggered HA failover.

In addition to that, a new host state is being introduced: Quarantine Mode. If a host enters a degraded state (triggered by vendor monitoring tools), it’s classified as being in either a “Moderate degradation” or a “Severe degradation” state, but both mean it goes into Quarantine Mode.

Based on the level of degradation, DRS may decide to keep using that host, i.e. not evacuate its VMs, if the demands of a VM or the business rules set cannot be satisfied by any other host. Again, this is another example of being proactive in protecting VM availability and it gives users greater flexibility in defining their rules.

Fault Tolerance (FT)

When it comes to Fault Tolerance, the improvements are more feature enhancements, rather than something completely new. That said, they are still very important in further improving this extremely useful feature.

First is improved DRS integration. When bringing up a set of machines, FT takes advantage of DRS in determining where the machines should be placed. Available network bandwidth is also taken into account when deciding the placement.

Secondly, there are performance improvements. Major gains have been made in the TCP stack and as a result, the performance of pretty much all operations has increased. Another major benefit is the introduction of multi-NIC aggregation. With the increased bandwidth available to FT, it opens the way for having more FT-enabled VMs on the same host, if required.

Distributed Resource Scheduling (DRS)

DRS is such a mature feature that it’s hard for VMware to find ways to improve it, but there are always feature requests from users who badly need something, and some of those make it into the product. There are a few new settings and a new feature to talk about.

DRS Policies

There are times when a cluster looks a bit unbalanced in terms of VM distribution. That typically happens when the hosts in a cluster are not particularly stressed, so based on its settings, DRS doesn’t see a need to move VMs. But that brings the danger of losing more VMs if a particular host dies suddenly. So, it would be nice to have an exception and move VMs when their placement isn’t particularly balanced.

That has now been made possible by introducing a tickbox that does just that. Select it and DRS will try to evenly distribute the VMs so that there aren’t too many eggs in one basket.

Another great new setting is the instruction to DRS to consider all consumed memory when making decisions to move a VM. By default, it uses Active Memory + 25%.
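
To make the difference between the two memory metrics concrete, here is a minimal Python sketch. One assumption is baked in and should be treated as such: the “+ 25%” is read here as 25% of the idle consumed memory (consumed minus active). The function and parameter names are invented for illustration.

```python
def drs_memory_demand_mb(active_mb: float, consumed_mb: float,
                         use_consumed: bool = False) -> float:
    """Memory figure DRS would weigh for a VM under each setting.

    Assumption: the default metric is active memory plus 25% of the
    idle part of consumed memory (consumed - active).
    """
    if use_consumed:
        # New option: base decisions on everything the VM has consumed.
        return consumed_mb
    idle_mb = max(consumed_mb - active_mb, 0.0)
    return active_mb + 0.25 * idle_mb


# A VM actively touching 2 GB while holding on to 8 GB.
print(drs_memory_demand_mb(2000, 8000))                     # default metric
print(drs_memory_demand_mb(2000, 8000, use_consumed=True))  # consumed memory
```

The gap between the two numbers shows why the consumed memory option gives a more conservative picture for clusters where memory is not over-committed.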

There is also protection now against CPU over-commitment, i.e. a limit on the number of virtual CPUs per physical CPU. Depending on this setting, no more VMs will be powered on once the threshold would be breached. This is particularly useful for VDI environments.
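
The over-commitment check boils down to a simple ratio test. The Python sketch below models the limit as vCPUs per physical core, which is an assumption about how the threshold is expressed; the function and its parameters are hypothetical.

```python
def can_power_on(running_vcpus: int, new_vm_vcpus: int,
                 physical_cores: int, max_vcpus_per_core: float) -> bool:
    """Return True if powering on the VM keeps the ratio within the limit."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    allowed_vcpus = physical_cores * max_vcpus_per_core
    return running_vcpus + new_vm_vcpus <= allowed_vcpus


# 16 cores with an assumed limit of 4 vCPUs per core: 64 vCPUs allowed.
print(can_power_on(running_vcpus=60, new_vm_vcpus=4, physical_cores=16, max_vcpus_per_core=4))
print(can_power_on(running_vcpus=62, new_vm_vcpus=4, physical_cores=16, max_vcpus_per_core=4))
```

For VDI, where many small desktops share each core, a cap like this stops a boot storm from piling more desktops onto hardware than it was sized for.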

Network-Aware DRS

In terms of DRS features, another very important improvement is the introduction of Network-Aware DRS. The network is a critical resource with a major impact on VM performance, so it’s about time it was included in DRS calculations.

So, as one would expect, this feature looks at host network saturation for the physical uplinks and avoids placing VMs on a host that has an over-subscribed network. That said, it’s not a guaranteed refusal to move to it as it’s better to have some networking than none at all! For that reason, CPU and Memory still take priority over network when DRS is making those decisions.
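
Here is a small Python sketch of that “prefer, but don’t insist” logic. It is only an illustration: the `Host` fields, the `pick_host` helper and the 0.8 saturation threshold are all assumptions made for the example, not documented values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Host:
    name: str
    fits_cpu_memory: bool      # CPU and memory checks come first
    uplink_utilization: float  # 0.0 to 1.0 across the physical uplinks


def pick_host(hosts: list[Host], saturation_threshold: float = 0.8) -> Optional[str]:
    """Choose a host, treating network saturation as a preference, not a veto."""
    candidates = [h for h in hosts if h.fits_cpu_memory]
    if not candidates:
        return None  # nothing satisfies CPU and memory, network is irrelevant
    unsaturated = [h for h in candidates if h.uplink_utilization < saturation_threshold]
    pool = unsaturated or candidates  # fall back to saturated hosts if needed
    return min(pool, key=lambda h: h.uplink_utilization).name


hosts = [
    Host("esx01", fits_cpu_memory=True, uplink_utilization=0.95),
    Host("esx02", fits_cpu_memory=True, uplink_utilization=0.40),
    Host("esx03", fits_cpu_memory=False, uplink_utilization=0.10),
]
print(pick_host(hosts))
```

Note that the idle-network host is skipped because it fails the CPU and memory check, and a saturated host would still be picked if it were the only one that fits.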

Storage I/O Control (SIOC)

There aren’t any changes in how SIOC works. However, its management has been improved, and that’s all to do with making it easier to implement.

The change is that it’s now managed via Storage Policy Based Management (SPBM). In the same way as other storage policies are defined, you can now set policies to control SIOC as well, and the limits are enforced using IO Filters.

Conclusion

I am sure you’ll agree that there are many new features (and improvements to existing ones) when we talk Availability and Performance in vSphere 6.5. Not only do they make the life of an admin easier, they also give an architect more options to choose from.

All these improvements give customers the assurance that VMware is not resting on its laurels and is still as committed to improving its products as ever.

Disclaimer: As of writing (October 17, 2016), this information is correct and while it’s hard to imagine these features changing between now and release, there is always a possibility.
